#include <InferenceEngine.hpp>

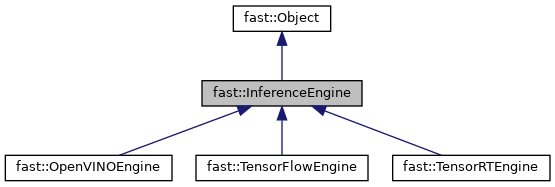

Inheritance diagram for fast::InferenceEngine:

Collaboration diagram for fast::InferenceEngine:

Classes | |

| struct | NetworkNode |

Public Types | |

| typedef std::shared_ptr< InferenceEngine > | pointer |

Public Types inherited from fast::Object | |

| typedef std::shared_ptr< Object > | pointer |

Public Member Functions | |

| virtual void | setFilename (std::string filename) |

| virtual void | setModelAndWeights (std::vector< uint8_t > model, std::vector< uint8_t > weights) |

| virtual std::string | getFilename () const |

| virtual void | run ()=0 |

| virtual void | addInputNode (uint portID, std::string name, NodeType type=NodeType::IMAGE, TensorShape shape={}) |

| virtual void | addOutputNode (uint portID, std::string name, NodeType type=NodeType::IMAGE, TensorShape shape={}) |

| virtual void | setInputNodeShape (std::string name, TensorShape shape) |

| virtual void | setOutputNodeShape (std::string name, TensorShape shape) |

| virtual NetworkNode | getInputNode (std::string name) const |

| virtual NetworkNode | getOutputNode (std::string name) const |

| virtual std::unordered_map< std::string, NetworkNode > | getOutputNodes () const |

| virtual std::unordered_map< std::string, NetworkNode > | getInputNodes () const |

| virtual void | setInputData (std::string inputNodeName, std::shared_ptr< Tensor > tensor) |

| virtual std::shared_ptr< Tensor > | getOutputData (std::string inputNodeName) |

| virtual void | load ()=0 |

| virtual bool | isLoaded () const |

| virtual ImageOrdering | getPreferredImageOrdering () const =0 |

| virtual std::string | getName () const =0 |

| virtual std::vector< ModelFormat > | getSupportedModelFormats () const =0 |

| virtual ModelFormat | getPreferredModelFormat () const =0 |

| virtual bool | isModelFormatSupported (ModelFormat format) |

| virtual void | setDeviceType (InferenceDeviceType type) |

| virtual void | setDevice (int index=-1, InferenceDeviceType type=InferenceDeviceType::ANY) |

| virtual std::vector< InferenceDeviceInfo > | getDeviceList () |

| virtual int | getMaxBatchSize () |

| virtual void | setMaxBatchSize (int size) |

Public Member Functions inherited from fast::Object | |

| Object () | |

| virtual | ~Object () |

| Reporter & | getReporter () |

Protected Member Functions | |

| virtual void | setIsLoaded (bool loaded) |

Protected Member Functions inherited from fast::Object | |

| Reporter & | reportError () |

| Reporter & | reportWarning () |

| Reporter & | reportInfo () |

| ReporterEnd | reportEnd () const |

Protected Attributes | |

| std::unordered_map< std::string, NetworkNode > | mInputNodes |

| std::unordered_map< std::string, NetworkNode > | mOutputNodes |

| int | m_deviceIndex = -1 |

| InferenceDeviceType | m_deviceType = InferenceDeviceType::ANY |

| int | m_maxBatchSize = 1 |

| std::vector< uint8_t > | m_model |

| std::vector< uint8_t > | m_weights |

Protected Attributes inherited from fast::Object | |

| std::weak_ptr< Object > | mPtr |

Additional Inherited Members | |

Static Public Member Functions inherited from fast::Object | |

| static std::string | getStaticNameOfClass () |

Detailed Description

Abstract base class for neural network inference engines such as TensorFlow, TensorRT and OpenVINO.
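To illustrate what the abstract interface implies for backends, here is a minimal sketch. `EngineSketch` is a simplified stand-in, not the real `fast::InferenceEngine`; it mirrors only `load()`, `run()`, `getName()`, `isLoaded()` and `setIsLoaded()` from the member list. `EchoEngine`, `runCount()`, `loadAndRun()` and the private `m_isLoaded` flag are hypothetical names introduced for this example.

```cpp
#include <cassert>
#include <string>

// Simplified stand-in for fast::InferenceEngine (NOT the real class):
// only a few of the documented members are mirrored here.
class EngineSketch {
public:
    virtual ~EngineSketch() = default;
    virtual void load() = 0;                  // pure virtual, as documented
    virtual void run() = 0;                   // pure virtual, as documented
    virtual std::string getName() const = 0;  // pure virtual, as documented
    virtual bool isLoaded() const { return m_isLoaded; }
protected:
    virtual void setIsLoaded(bool loaded) { m_isLoaded = loaded; }
private:
    bool m_isLoaded = false;  // hypothetical storage for the loaded flag
};

// Hypothetical backend used only for illustration.
class EchoEngine : public EngineSketch {
public:
    void load() override { setIsLoaded(true); }
    void run() override {
        assert(isLoaded() && "load() must be called before run()");
        ++m_runs;
    }
    std::string getName() const override { return "EchoEngine"; }
    int runCount() const { return m_runs; }
private:
    int m_runs = 0;  // counts how often run() was called
};

// Code written against the base class works with any backend.
std::string loadAndRun(EngineSketch& engine) {
    if (!engine.isLoaded()) engine.load();
    engine.run();
    return engine.getName();
}
```

A caller would construct a concrete backend and pass it to `loadAndRun()`. The real engines (fast::OpenVINOEngine, fast::TensorRTEngine, fast::TensorFlowEngine) implement the pure virtual members listed on this page in the same way.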

Member Typedef Documentation

◆ pointer

| typedef std::shared_ptr<InferenceEngine> fast::InferenceEngine::pointer |

Member Function Documentation

◆ addInputNode()

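| virtual void fast::InferenceEngine::addInputNode (uint portID, std::string name, NodeType type=NodeType::IMAGE, TensorShape shape={}) | virtual |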

◆ addOutputNode()

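| virtual void fast::InferenceEngine::addOutputNode (uint portID, std::string name, NodeType type=NodeType::IMAGE, TensorShape shape={}) | virtual |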

◆ getDeviceList()

| virtual std::vector< InferenceDeviceInfo > fast::InferenceEngine::getDeviceList () | virtual |

Get a list of devices available for this inference engine.

- Returns: a vector with information on each available device.

Reimplemented in fast::OpenVINOEngine, and fast::TensorFlowEngine.
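As a rough sketch of how a returned device list might be consumed, the example below searches a list for the first device of a given type. The field layout of `DeviceInfoSketch` (`name`, `type`, `index`) is an assumption, since the members of `InferenceDeviceInfo` are not documented on this page. `DeviceTypeSketch` is likewise a stand-in: only `ANY` appears here, so `CPU` and `GPU` are hypothetical values. `findDeviceIndex()` is a helper invented for this example.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Stand-in enum for illustration; only ANY appears in this reference page.
enum class DeviceTypeSketch { ANY, CPU, GPU };

// Hypothetical layout; the real InferenceDeviceInfo fields are not documented here.
struct DeviceInfoSketch {
    std::string name;
    DeviceTypeSketch type;
    int index;
};

// Returns the index of the first device of the wanted type, or -1 if none matches.
// ANY matches every device, so it yields the first entry of a non-empty list.
int findDeviceIndex(const std::vector<DeviceInfoSketch>& devices, DeviceTypeSketch wanted) {
    for (const auto& device : devices) {
        assert(device.index >= 0 && "device indices are assumed to be non-negative");
        if (wanted == DeviceTypeSketch::ANY || device.type == wanted)
            return device.index;
    }
    return -1;
}
```

Returning -1 when nothing matches lines up with the documented default of `setDevice()`, where -1 means any device can be used.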

◆ getFilename()

| virtual std::string fast::InferenceEngine::getFilename () const | virtual |

◆ getInputNode()

| virtual NetworkNode fast::InferenceEngine::getInputNode (std::string name) const | virtual |

◆ getInputNodes()

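| virtual std::unordered_map< std::string, NetworkNode > fast::InferenceEngine::getInputNodes () const | virtual |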

◆ getMaxBatchSize()

| virtual int fast::InferenceEngine::getMaxBatchSize () | virtual |

◆ getName()

| virtual std::string fast::InferenceEngine::getName () const | pure virtual |

Implemented in fast::OpenVINOEngine, fast::TensorRTEngine, and fast::TensorFlowEngine.

◆ getOutputData()

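| virtual std::shared_ptr< Tensor > fast::InferenceEngine::getOutputData (std::string inputNodeName) | virtual |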

◆ getOutputNode()

| virtual NetworkNode fast::InferenceEngine::getOutputNode (std::string name) const | virtual |

◆ getOutputNodes()

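| virtual std::unordered_map< std::string, NetworkNode > fast::InferenceEngine::getOutputNodes () const | virtual |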

◆ getPreferredImageOrdering()

| virtual ImageOrdering fast::InferenceEngine::getPreferredImageOrdering () const | pure virtual |

Implemented in fast::OpenVINOEngine, fast::TensorFlowEngine, and fast::TensorRTEngine.

◆ getPreferredModelFormat()

| virtual ModelFormat fast::InferenceEngine::getPreferredModelFormat () const | pure virtual |

Implemented in fast::OpenVINOEngine, fast::TensorFlowEngine, and fast::TensorRTEngine.

◆ getSupportedModelFormats()

| virtual std::vector< ModelFormat > fast::InferenceEngine::getSupportedModelFormats () const | pure virtual |

Implemented in fast::OpenVINOEngine, fast::TensorFlowEngine, and fast::TensorRTEngine.

◆ isLoaded()

| virtual bool fast::InferenceEngine::isLoaded () const | virtual |

◆ isModelFormatSupported()

| virtual bool fast::InferenceEngine::isModelFormatSupported (ModelFormat format) | virtual |

◆ load()

| virtual void fast::InferenceEngine::load () | pure virtual |

Implemented in fast::OpenVINOEngine, fast::TensorRTEngine, and fast::TensorFlowEngine.

◆ run()

| virtual void fast::InferenceEngine::run () | pure virtual |

Implemented in fast::TensorFlowEngine, fast::OpenVINOEngine, and fast::TensorRTEngine.

◆ setDevice()

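| virtual void fast::InferenceEngine::setDevice (int index=-1, InferenceDeviceType type=InferenceDeviceType::ANY) | virtual |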

Specify which device index and/or device type to use.

- Parameters

| index | Index of the device to use. -1 means any device can be used. |
| type | Device type to use. |
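The default arguments mean that either parameter can be left unspecified. The sketch below mirrors the documented signature and the documented defaults of `m_deviceIndex` (-1) and `m_deviceType` (`ANY`) in a stand-in class. It assumes the base implementation simply records the request, which this page does not confirm. `DeviceSelectionSketch`, `DeviceTypeSketch`, the accessors, and the enum values other than `ANY` are hypothetical.

```cpp
#include <cassert>

// Stand-in enum for illustration; only ANY is documented on this page.
enum class DeviceTypeSketch { ANY, CPU, GPU };

// Stand-in mirroring the documented setDevice() signature and default member values.
class DeviceSelectionSketch {
public:
    // Assumption: the base implementation simply records the request.
    void setDevice(int index = -1, DeviceTypeSketch type = DeviceTypeSketch::ANY) {
        assert(index >= -1 && "-1 is the only negative index with a meaning");
        m_deviceIndex = index;
        m_deviceType = type;
    }
    int deviceIndex() const { return m_deviceIndex; }
    DeviceTypeSketch deviceType() const { return m_deviceType; }
private:
    int m_deviceIndex = -1;                                 // -1: any device
    DeviceTypeSketch m_deviceType = DeviceTypeSketch::ANY;  // ANY: any device type
};
```

With this shape, `setDevice(1)` pins the index and leaves the type open, `setDevice(-1, DeviceTypeSketch::GPU)` constrains only the type, and `setDevice()` resets both to "any".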

◆ setDeviceType()

| virtual void fast::InferenceEngine::setDeviceType (InferenceDeviceType type) | virtual |

Set which device type the inference engine should use. This is only relevant if the inference engine supports multiple device types, as OpenVINO does.

- Parameters

| type | Device type to use. |

◆ setFilename()

| virtual void fast::InferenceEngine::setFilename (std::string filename) | virtual |

◆ setInputData()

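| virtual void fast::InferenceEngine::setInputData (std::string inputNodeName, std::shared_ptr< Tensor > tensor) | virtual |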

◆ setInputNodeShape()

| virtual void fast::InferenceEngine::setInputNodeShape (std::string name, TensorShape shape) | virtual |

◆ setIsLoaded()

| virtual void fast::InferenceEngine::setIsLoaded (bool loaded) | protected virtual |

◆ setMaxBatchSize()

| virtual void fast::InferenceEngine::setMaxBatchSize (int size) | virtual |

Reimplemented in fast::TensorRTEngine.

◆ setModelAndWeights()

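| virtual void fast::InferenceEngine::setModelAndWeights (std::vector< uint8_t > model, std::vector< uint8_t > weights) | virtual |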

◆ setOutputNodeShape()

| virtual void fast::InferenceEngine::setOutputNodeShape (std::string name, TensorShape shape) | virtual |

Member Data Documentation

◆ m_deviceIndex

| int fast::InferenceEngine::m_deviceIndex = -1 | protected |

◆ m_deviceType

| InferenceDeviceType fast::InferenceEngine::m_deviceType = InferenceDeviceType::ANY | protected |

◆ m_maxBatchSize

| int fast::InferenceEngine::m_maxBatchSize = 1 | protected |

◆ m_model

| std::vector< uint8_t > fast::InferenceEngine::m_model | protected |

◆ m_weights

| std::vector< uint8_t > fast::InferenceEngine::m_weights | protected |

◆ mInputNodes

| std::unordered_map< std::string, NetworkNode > fast::InferenceEngine::mInputNodes | protected |

◆ mOutputNodes

| std::unordered_map< std::string, NetworkNode > fast::InferenceEngine::mOutputNodes | protected |

The documentation for this class was generated from the following file:

- Algorithms/NeuralNetwork/InferenceEngine.hpp

1.8.17
